Build a kick-ass Couchbase stack for under $1000

There are many articles with great information on how to size your Couchbase cluster, but they rarely mention specific server specifications or the details and pricing of a complete stack. (Check out this great blog post for sizing Couchbase deployments.)

In this article we are going to look at how you can build an awesome cloud-based Couchbase solution, with a lot of headroom and power, for under $1000 a month!

AWS and other cloud vendors such as Heroku and Digital Ocean provide a great service and a plethora of features, but we often find them pricey when you want power, and performance can be erratic unless you pay more for dedicated instances. We do love being able to spin up instances quickly, and AWS is one of our main resources for testing out features. (Note: these services are great and this isn't bashing them; it's just a different approach.)

However, if you are comfortable with Linux administration and want some real bang for your buck, there are quality alternatives out there.

Enter Hetzner

Hetzner offer a whole host of dedicated and managed bare-metal servers that are cheap, reliable and, most importantly, powerful! We are going to put together an awesome collection of servers and services for under $1000 a month.

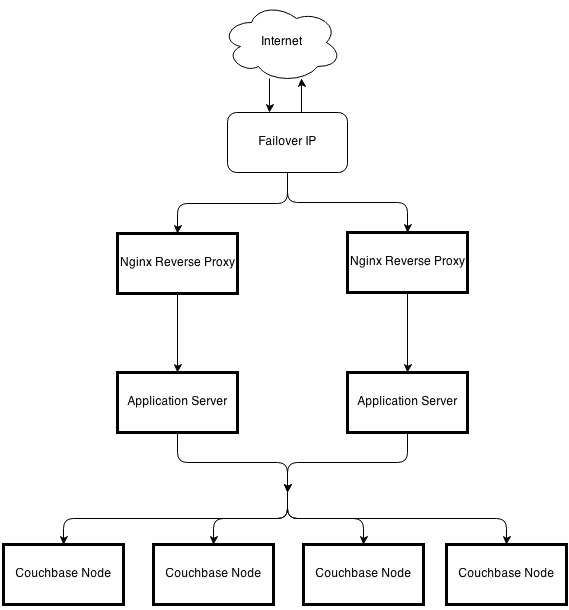

Our stack is going to be focused on both performance and redundancy. Our shopping list will look something like this:

- 2 dedicated servers for our reverse proxy of choice (NGINX)

- A shared failover IP for redundancy

- 2 dedicated servers for our application logic tier (be it Java, Ruby, Python or something else)

- 4 dedicated servers running as a 4-node Couchbase cluster

Let's look at each tier in a little more detail! Below is a rough outline of how our server stack would be deployed. (Only one NGINX instance is active at a time, proxying requests to both application servers.)

Reverse Proxy Tier

This tier is the barrier between the wilds of the internet and our application. Personally we love NGINX: it's fast, flexible and not a resource monster. Still, with Hetzner being so cheap we can provision two servers with a lot of muscle. We'll be ordering 2 EX40 servers plus an additional failover IP, which means we also need the Flexipack on both.

Failover IPs are additional IPs that can be switched from one server to another within a few seconds via the Hetzner API.
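Switching the failover IP can be scripted. Below is a minimal Python sketch that builds the HTTP request for the switch without sending it. It assumes the Hetzner Robot webservice endpoint `POST https://robot-ws.your-server.de/failover/<failover-ip>`, an `active_server_ip` form field and HTTP basic auth with your Robot webservice credentials. Confirm all three against Hetzner's current API documentation before relying on them. The IP addresses and credentials are hypothetical placeholders.

```python
import base64
import urllib.parse
import urllib.request

# Assumed Robot webservice base URL; check Hetzner's API docs before use.
ROBOT_API = "https://robot-ws.your-server.de"


def build_failover_request(failover_ip: str, target_server_ip: str,
                           user: str, password: str) -> urllib.request.Request:
    """Build (but do not send) a request routing failover_ip to target_server_ip."""
    url = f"{ROBOT_API}/failover/{failover_ip}"
    # Assumed form field naming the server that should receive the traffic.
    body = urllib.parse.urlencode({"active_server_ip": target_server_ip}).encode()
    token = base64.b64encode(f"{user}:{password}".encode()).decode()
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            "Authorization": f"Basic {token}",
            "Content-Type": "application/x-www-form-urlencoded",
        },
    )


if __name__ == "__main__":
    # Hypothetical addresses (documentation ranges) and credentials.
    req = build_failover_request("203.0.113.10", "198.51.100.2", "robot-user", "secret")
    print(req.get_method(), req.full_url)
    # Uncomment to actually perform the switch:
    # with urllib.request.urlopen(req, timeout=10) as resp:
    #     print(resp.status)
```

In practice you would trigger this from a health check running on the standby NGINX server.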

Key Stats

- Intel® Core™ i7-4770 quad-core Haswell, 3.4 GHz clock speed

- 32 GB of RAM

- 2 x 2 TB SATA 6 Gb/s 7200 rpm HDD (software RAID 1)

- 20 TB of included traffic

Application Logic Tier

Again we'll be ordering 2 EX40 servers for our application tier; they easily have enough power and can run more than one service. Personally we only use Java or Ruby, and either can easily handle 99% of use cases. The two servers are mainly for redundancy: in our setup NGINX load balances between them, and if one crashes it routes all traffic to the remaining server until the failed one comes back up or is replaced.
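NGINX's upstream module handles this for you. Its `max_fails` and `fail_timeout` server parameters control when a backend is temporarily treated as unavailable. The toy Python model below illustrates the behaviour just described: round-robin balancing that skips backends marked as down. It is a simplification, not NGINX's actual algorithm, and the backend names are hypothetical.

```python
from itertools import cycle


class RoundRobinBalancer:
    """Toy round-robin balancer that skips backends currently marked down."""

    def __init__(self, backends):
        self.backends = list(backends)
        self.healthy = set(self.backends)
        self._ring = cycle(self.backends)

    def mark_down(self, backend):
        self.healthy.discard(backend)

    def mark_up(self, backend):
        self.healthy.add(backend)

    def pick(self):
        # Walk the ring at most once per request, skipping unhealthy backends.
        for _ in range(len(self.backends)):
            candidate = next(self._ring)
            if candidate in self.healthy:
                return candidate
        raise RuntimeError("no healthy backends available")


if __name__ == "__main__":
    lb = RoundRobinBalancer(["app1", "app2"])  # hypothetical backend names
    print([lb.pick() for _ in range(4)])  # alternates between app1 and app2
    lb.mark_down("app2")
    print([lb.pick() for _ in range(3)])  # all traffic goes to app1
```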

Key Stats

See the stats for the proxy tier servers above!

Couchbase Tier

For the Couchbase tier we are going to pick a more powerful server with more cores, SSDs and extra RAM, all of which are worth upgrading if you can. Couchbase views benefit from additional cores, and the more RAM you have, the more of your working set is held in memory, making operations super fast compared with disk-based access.

So we'll be ordering 4 PX90SSD servers for our nodes. Couchbase themselves recommend running at least 3 nodes in production. We are going with 4 nodes because:

- It fits in our budget.

- The cluster can hold more data.

- Spreading the data over more nodes means that if a node goes down, a smaller share of the data is affected.
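Couchbase splits each bucket into 1024 vBuckets on Linux and spreads them across the nodes, which is why more nodes means a smaller share per node. Here is a small Python sketch of the arithmetic. It assumes a perfectly even spread; real layouts are close to this but not necessarily exact.

```python
NUM_VBUCKETS = 1024  # Couchbase's vBucket count per bucket on Linux


def active_vbuckets_per_node(num_nodes: int) -> list[int]:
    """Spread the active vBuckets as evenly as possible across num_nodes."""
    base, extra = divmod(NUM_VBUCKETS, num_nodes)
    return [base + (1 if i < extra else 0) for i in range(num_nodes)]


def largest_share_per_node(num_nodes: int) -> float:
    """Biggest fraction of active data that a single node failure affects."""
    return max(active_vbuckets_per_node(num_nodes)) / NUM_VBUCKETS


if __name__ == "__main__":
    for nodes in (3, 4):
        print(nodes, active_vbuckets_per_node(nodes), f"{largest_share_per_node(nodes):.1%}")
```

Going from 3 to 4 nodes drops the share held by any single node from about a third to a quarter. With replication enabled, that share is failed over to replica copies rather than lost.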

You really should be using replication in Couchbase, especially in production. We run in production with one replica, so if a node goes down and is failed over, the replica copies of its data are promoted to active in its place. Couchbase spreads those replica copies across the other nodes. You can dial up the number of replicas, BUT it comes at the cost of additional RAM and disk and increased network traffic. It really depends on your use case: with a 4-node cluster and one replica we can survive two nodes going down, provided they fail one at a time and the cluster is rebalanced in between.
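To see why one replica covers a single node failure but not two simultaneous ones, here is a simplified Python model of vBucket placement. Each vBucket has an active copy on one node and a replica on a different node, with replicas rotated across all the other nodes. This placement rule is our own simplification, not Couchbase's actual algorithm.

```python
from itertools import combinations

NUM_VBUCKETS = 1024


def place_vbuckets(num_nodes: int) -> list[tuple[int, int]]:
    """Return an (active_node, replica_node) pair per vBucket (simplified layout)."""
    if num_nodes < 2:
        raise ValueError("one replica needs at least two nodes")
    layout = []
    for vb in range(NUM_VBUCKETS):
        active = vb % num_nodes
        # Rotate the replica over every other node so each node's data is
        # backed up across the whole cluster rather than on a single partner.
        offset = 1 + (vb // num_nodes) % (num_nodes - 1)
        layout.append((active, (active + offset) % num_nodes))
    return layout


def lost_vbuckets(layout, failed_nodes) -> int:
    """Count vBuckets whose active and replica copies are both on failed nodes."""
    failed = set(failed_nodes)
    return sum(1 for active, replica in layout if active in failed and replica in failed)


if __name__ == "__main__":
    layout = place_vbuckets(4)
    for node in range(4):
        print(f"node {node} down: {lost_vbuckets(layout, [node])} vBuckets lost")
    for pair in combinations(range(4), 2):
        print(f"nodes {pair} down together: {lost_vbuckets(layout, pair)} vBuckets lost")
```

A failover followed by a rebalance rebuilds replicas on the surviving nodes. That is why two failures with a rebalance in between are survivable, while two at once are not.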

The model we've chosen has a generous amount of RAM, a hexa-core processor (hyper-threaded too) and 2 x 240 GB SSDs. SSDs are really great if your working set doesn't fit in RAM, and they also help the disk write queue drain quickly. If each node gives 50 GB of its RAM to Couchbase, the cluster will have a total RAM quota of 200 GB while leaving plenty of RAM for the operating system!
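Here is the RAM arithmetic as a quick Python sketch. It assumes replica copies also count against the bucket's RAM quota, as they do in Couchbase. It also ignores metadata overhead, so treat the second figure as an upper bound on how much unique data can stay fully in memory.

```python
def cluster_ram_gb(nodes: int, quota_per_node_gb: float) -> float:
    """Total RAM quota across the cluster."""
    return nodes * quota_per_node_gb


def unique_data_in_ram_gb(nodes: int, quota_per_node_gb: float, replicas: int) -> float:
    """Upper bound on unique data held fully in RAM (ignores metadata overhead)."""
    # Every document is stored once as active plus once per replica.
    return cluster_ram_gb(nodes, quota_per_node_gb) / (1 + replicas)


if __name__ == "__main__":
    print(cluster_ram_gb(4, 50))            # 200 GB of RAM quota in total
    print(unique_data_in_ram_gb(4, 50, 1))  # roughly 100 GB of unique data with one replica
```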

Key Stats

- Intel® Xeon® E5-1650 v2 hexa-core, 3.5 GHz clock speed

- 64 GB of RAM

- 2 x 240 GB SATA 6 Gb/s Data Center Series SSD

- 30 TB of included traffic

Cons of this approach

We're not going to pretend this is a perfect setup, so let's take a brief look at some of the disadvantages. The biggest pain point for most people is that you need to be good with Linux. When you order a server it comes installed with your distro of choice (we always pick Ubuntu 12.04 LTS) and that's it (Hetzner do offer Windows too).

Everything is up to you, including setting up firewalls, SSH keys and users, and setting swappiness and open file limits (both quite crucial for Couchbase). You have to be confident in your ability to wire everything together correctly. That is one of the great things about services such as Heroku: it's point, click and deploy! You can pay Hetzner more to manage more of your infrastructure, but not everyone has a huge tech budget.
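Both settings are easy to check before installing Couchbase. Here is a minimal Python preflight sketch (Linux only). It reads `vm.swappiness` from `/proc/sys/vm/swappiness` and the soft open-file limit via `resource.getrlimit`. The thresholds (swappiness of at most 1, at least 40960 open files) are our assumptions; check the Couchbase documentation for the values recommended for your version.

```python
import resource
from pathlib import Path

# Assumed thresholds; confirm against Couchbase's docs for your version.
MAX_SWAPPINESS = 1
MIN_OPEN_FILES = 40960


def read_swappiness(path: Path = Path("/proc/sys/vm/swappiness")):
    """Return vm.swappiness as an int, or None if it cannot be read."""
    try:
        return int(path.read_text().strip())
    except (OSError, ValueError):
        return None


def check_host(swappiness, nofile_soft_limit) -> list[str]:
    """Return a list of human-readable problems (empty means all good)."""
    problems = []
    if swappiness is None:
        problems.append("could not read vm.swappiness")
    elif swappiness > MAX_SWAPPINESS:
        problems.append(f"vm.swappiness is {swappiness}, want <= {MAX_SWAPPINESS}")
    unlimited = nofile_soft_limit == resource.RLIM_INFINITY
    if not unlimited and nofile_soft_limit < MIN_OPEN_FILES:
        problems.append(f"open file limit is {nofile_soft_limit}, want >= {MIN_OPEN_FILES}")
    return problems


if __name__ == "__main__":
    soft_limit, _hard_limit = resource.getrlimit(resource.RLIMIT_NOFILE)
    for problem in check_host(read_swappiness(), soft_limit):
        print("WARN:", problem)
```

Note that `getrlimit` reports the limit of the process running the script, which can differ from the limit the Couchbase service starts with. Persist any fixes in the usual places, such as `/etc/sysctl.conf` for swappiness and `/etc/security/limits.conf` for `nofile`.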

Secondly, we selected SSDs on our Couchbase nodes for faster disk reads and writes, but unfortunately they aren't the biggest disks ever (240 GB), so you have to be careful with data fragmentation. Couchbase uses an append-only storage format, so your data files grow over time until compaction kicks in and reduces the file size. As noted in the sizing post linked at the top of the page, the on-disk size can grow to 2-3 times the actual size of your data before compaction. You need to be especially aware of this if you are using large views. It can be mitigated by either:

- going with the PX90, which is the same server but with 2 TB standard disks instead of SSDs, or

- adding more nodes to the cluster.

As we wanted to keep the cost below $1000 and we wanted speed, speed, speed, we opted for the SSD version.
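Here is a rough Python sketch of how much data the SSDs can hold given that growth. It assumes the two 240 GB SSDs in each node are mirrored (RAID 1), leaving about 240 GB usable per node. It also ignores space for the OS, logs and view indexes, so the real figure will be lower.

```python
def max_live_data_per_node_gb(usable_disk_gb: float, growth_factor: float) -> float:
    """Live data per node if files can reach growth_factor x before compaction."""
    return usable_disk_gb / growth_factor


def unique_data_capacity_gb(nodes: int, usable_disk_gb: float,
                            growth_factor: float, replicas: int) -> float:
    """Unique data the cluster can hold on disk, counting replica copies."""
    per_node = max_live_data_per_node_gb(usable_disk_gb, growth_factor)
    # Active and replica copies both occupy disk, so divide by (1 + replicas).
    return nodes * per_node / (1 + replicas)


if __name__ == "__main__":
    # Assumption: ~240 GB usable per node (2 x 240 GB SSD in RAID 1).
    for factor in (2, 3):
        print(f"{factor}x growth: {unique_data_capacity_gb(4, 240, factor, 1):.0f} GB")
```

Under these assumptions the 4-node cluster with one replica tops out at roughly 160 to 240 GB of unique data on disk.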

Pros of this approach

For under $1000 a month you get an incredibly powerful cluster of servers; check out the other cloud vendors and see how much servers with equivalent specs would cost! Yes, we know people will say that AWS and Heroku save developer time, which is the costliest resource of all, and that's true. But there is something to be said for having complete control of your servers and no resource contention (we're looking at you, AWS!).

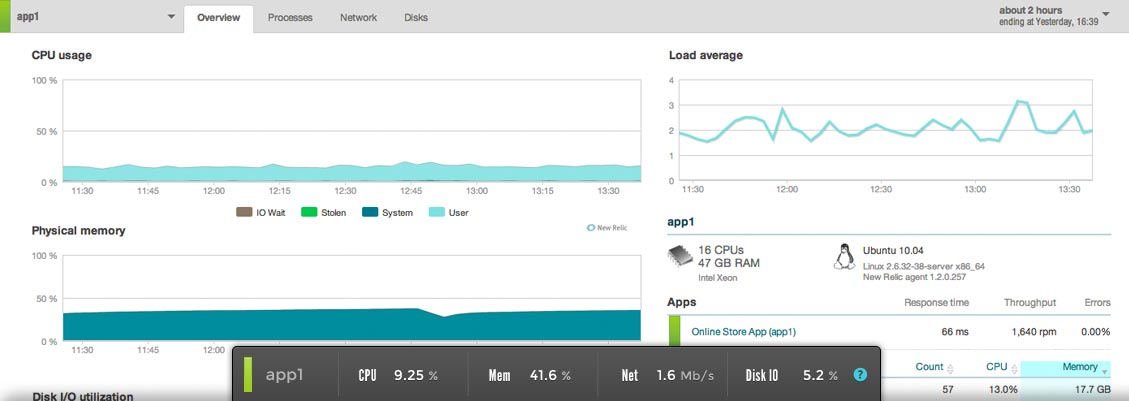

There is a lot of headroom in this stack for growth. We use an almost identical stack in production for a JSON REST API, and with millions of operations every day we average sub-10 ms response times.

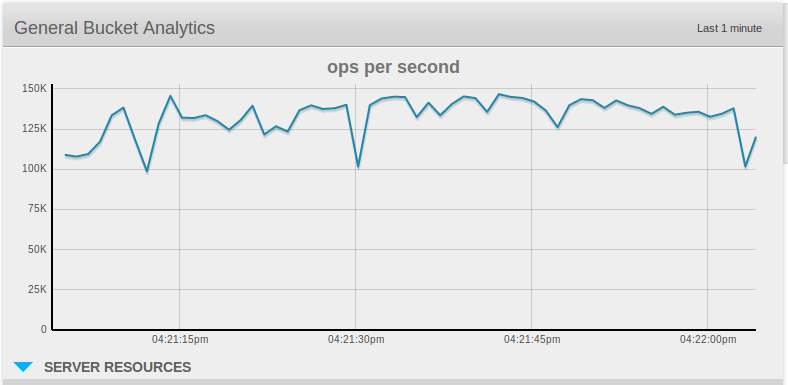

The most we've pushed our cluster in production is 20k operations a second, but in performance testing we pushed it to almost 150k operations a second! See below!

Conclusion and useful links

Full pricing breakdown

So our shopping spree breaks down like so (monthly prices, always rounded up):

4 x EX40 @ $68 for a total of $272

2 x Flexipack @ $29 for a total of $58

1 x Failover IP @ $7

4 x PX90SSD @ $151 for a total of $604

Total cost: $941 per month

(€678/£566)
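Here is a quick Python check that the monthly line totals above add up, and of how much budget is left over. The figures are copied from the list; update them if Hetzner's prices change.

```python
# Monthly line totals in USD, copied from the breakdown above.
LINE_TOTALS = {
    "4 x EX40": 272,
    "2 x Flexipack": 58,
    "1 x Failover IP": 7,
    "4 x PX90SSD": 604,
}
BUDGET = 1000

total = sum(LINE_TOTALS.values())
couchbase_share = LINE_TOTALS["4 x PX90SSD"] / total

print(f"Total: ${total} (${BUDGET - total} under budget)")
print(f"Couchbase tier: {couchbase_share:.0%} of the spend")
```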

Features to add to the stack

Server monitoring is crucial. New Relic offer a great free tier of server monitoring for as many servers as you want. You can monitor CPU, RAM, disk and network I/O, and set up email alerts when certain conditions are breached, e.g. disk and RAM usage on your Couchbase nodes! You can check it out here.
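If you want a local safety net alongside New Relic, a disk check is simple to sketch. This minimal Python example uses `shutil.disk_usage` with a hypothetical 75% threshold. The threshold is our assumption, chosen to leave room for compaction, which writes out a fresh copy of a data file before the old one is removed.

```python
import shutil

ALERT_THRESHOLD = 0.75  # hypothetical: leave headroom for compaction


def should_alert(used_bytes: int, total_bytes: int, threshold: float = ALERT_THRESHOLD) -> bool:
    """True when the used fraction of a disk reaches the threshold."""
    if total_bytes <= 0:
        return False
    return used_bytes / total_bytes >= threshold


def check_path(path: str = "/", threshold: float = ALERT_THRESHOLD) -> bool:
    """Check the filesystem holding `path` (point it at your Couchbase data directory)."""
    usage = shutil.disk_usage(path)
    return should_alert(usage.used, usage.total, threshold)


if __name__ == "__main__":
    if check_path("/"):
        print("WARN: disk usage above threshold")
```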

Security

Please, please, please: if you run your own cloud like this, make sure it is secure, that only the ports that need to be open are open, and that they accept connections from specific IPs only. If you're using Ubuntu, take a look at UFW for configuring the firewall.

For example, to accept connections on a specific port (here 8092, the Couchbase views port) from a specific IP only, you could run:

```shell
sudo ufw allow proto tcp from YOUR_IP to any port 8092
```
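With several servers talking to the Couchbase nodes, the rules multiply quickly, so it can help to generate them. This small Python sketch prints UFW commands in the same form as the example above. The port lists are assumptions based on commonly documented Couchbase ports: 8091 for admin/REST, 8092 for views and 11210 for data, plus 4369 and 21100-21299 between nodes. Check Couchbase's network configuration documentation for your version. The IPs are hypothetical.

```python
# Assumed Couchbase TCP ports; verify against the docs for your Couchbase version.
CLIENT_PORTS = ["8091", "8092", "11210"]             # app servers -> cluster
NODE_PORTS = CLIENT_PORTS + ["4369", "21100:21299"]  # node <-> node


def ufw_rules(allowed_ips, ports):
    """Build one `ufw allow` command per (source IP, port or port range)."""
    return [
        f"sudo ufw allow proto tcp from {ip} to any port {port}"
        for ip in allowed_ips
        for port in ports
    ]


if __name__ == "__main__":
    app_servers = ["203.0.113.21", "203.0.113.22"]                    # hypothetical
    couchbase_nodes = ["203.0.113.31", "203.0.113.32",
                       "203.0.113.33", "203.0.113.34"]                # hypothetical
    for rule in ufw_rules(app_servers, CLIENT_PORTS) + ufw_rules(couchbase_nodes, NODE_PORTS):
        print(rule)
```

Run the generated rules on each Couchbase node together with `sudo ufw default deny incoming`. Add a rule for SSH before `sudo ufw enable` so you don't lock yourself out.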

Also check out fail2ban, which scans log files for malicious activity such as repeated failed login attempts and bans the offending IPs.

Deployment and provisioning

Once your Hetzner account is set up, it takes around an hour for a new server to be provisioned (your first servers can take 2 days while your account is verified). It's best to automate deployment and server setup; the most popular options are Chef, Puppet and Ansible. With plenty of headroom on each server and low prices, you should easily be able to spot in advance when you'll need to scale, and add servers as you need them!
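One way to spot that moment in advance is to fit a trend line to a daily metric, such as RAM or disk used on your Couchbase nodes, and estimate when it will cross a threshold. Below is a minimal Python sketch using an ordinary least-squares fit. The 85% threshold and the sample figures are hypothetical.

```python
def days_until_threshold(daily_usage_gb, capacity_gb, threshold=0.85):
    """Estimate days until usage reaches threshold * capacity.

    Fits a least-squares line to the samples (one per day, oldest first).
    Returns None when usage is flat or shrinking.
    """
    n = len(daily_usage_gb)
    if n < 2:
        raise ValueError("need at least two daily samples")
    mean_x = (n - 1) / 2
    mean_y = sum(daily_usage_gb) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(daily_usage_gb))
    variance = sum((x - mean_x) ** 2 for x in range(n))
    slope = covariance / variance  # GB per day
    if slope <= 0:
        return None
    latest_fit = mean_y + slope * (n - 1 - mean_x)  # trend value for the newest day
    return max(0.0, (capacity_gb * threshold - latest_fit) / slope)


if __name__ == "__main__":
    # Hypothetical week of RAM usage on a 200 GB cluster quota.
    samples = [120, 121.5, 122, 124, 125.5, 126, 128]
    print(f"{days_until_threshold(samples, 200):.0f} days of headroom left")
```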

Well, that's all for this article! If you have any comments, suggestions or queries, leave a comment below or reach out to us on Twitter.